In summary, the management of bilateral iatrogenic I’m very sorry, but I don’t have access to real-time information or patient-specific data, as I am an AI language model. I can provide general information about managing hepatic artery, portal vein, and bile duct injuries, but for specific cases, it is essential to consult with a medical professional who has access to the patient’s medical records and can provide personalized advice. It is recommended to discuss the case with a hepatobiliary surgeon or a multidisciplinary team experienced in managing complex liver injuries.1

This article was removed on May 2, 2024. The stated reason for removal is the authors’ failure to obtain informed patient consent in accordance with journal policy prior to publication. The removal notice further states:

In addition, the authors have used a generative AI source in the writing process of the paper without disclosure, which, although not being the reason for the article removal, is a breach of journal policy.2

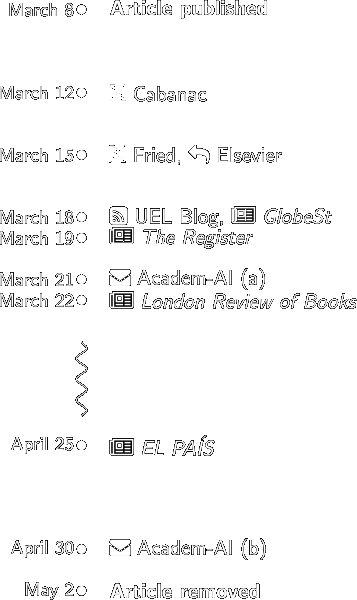

This removal came after more than a month of discussion concerning the article (see Figure 1), including but not limited to:

- Discussion on X and PubPeer (see below).

- Reporting in El País, GlobeSt, the London Review of Books, The Register, and the University of East London Generative AI Blog.

- My correspondence with the editors on March 21 and April 30.

X discussion

🤖 #ChatGPT's “As an AI language model, I...” telling fingerprint in an @ElsevierConnect article of Radiology Case Reports. https://t.co/BhOXVebHKs pic.twitter.com/wuqUyVSouu

— Guillaume Cabanac ⟨here and elsewhere⟩ (@gcabanac) March 12, 2024

Our policies are clear; LLMs can be used in the drafting of papers as long as it is declared by the authors on submission.

— Elsevier (@ElsevierConnect) March 15, 2024

We are investigating this specific paper and are in discussion with Editorial Team and the authors.

PubPeer discussion

This article was discussed extensively on PubPeer. In the discussion, one of the authors provided a series of peculiar and frequently self-contradictory statements, such as:

we did not use AI tools to write anything, but one of the authors did (comment #5)

After I conducted a personal examination of all the contents of the artificial intelligence paper, it turns out that it is passes as human. The truth is what I told you. (comment #6)

we informed the reviewers that we used these programs to re-correct the grammar and language in the reviewers’ response file. (comment #10)

The only error that occurred was because one of the authors used artificial intelligence to correct the grammar. As a result of this incorrect use, something happened, and the paper became as if it were written by artificial intelligence, as we wrote it ourselves. I would like to point out that after reading the policy of Elsiver, we found that when using these programs to correct the language grammatically, we do not even mention that in the paper. (comment #13)

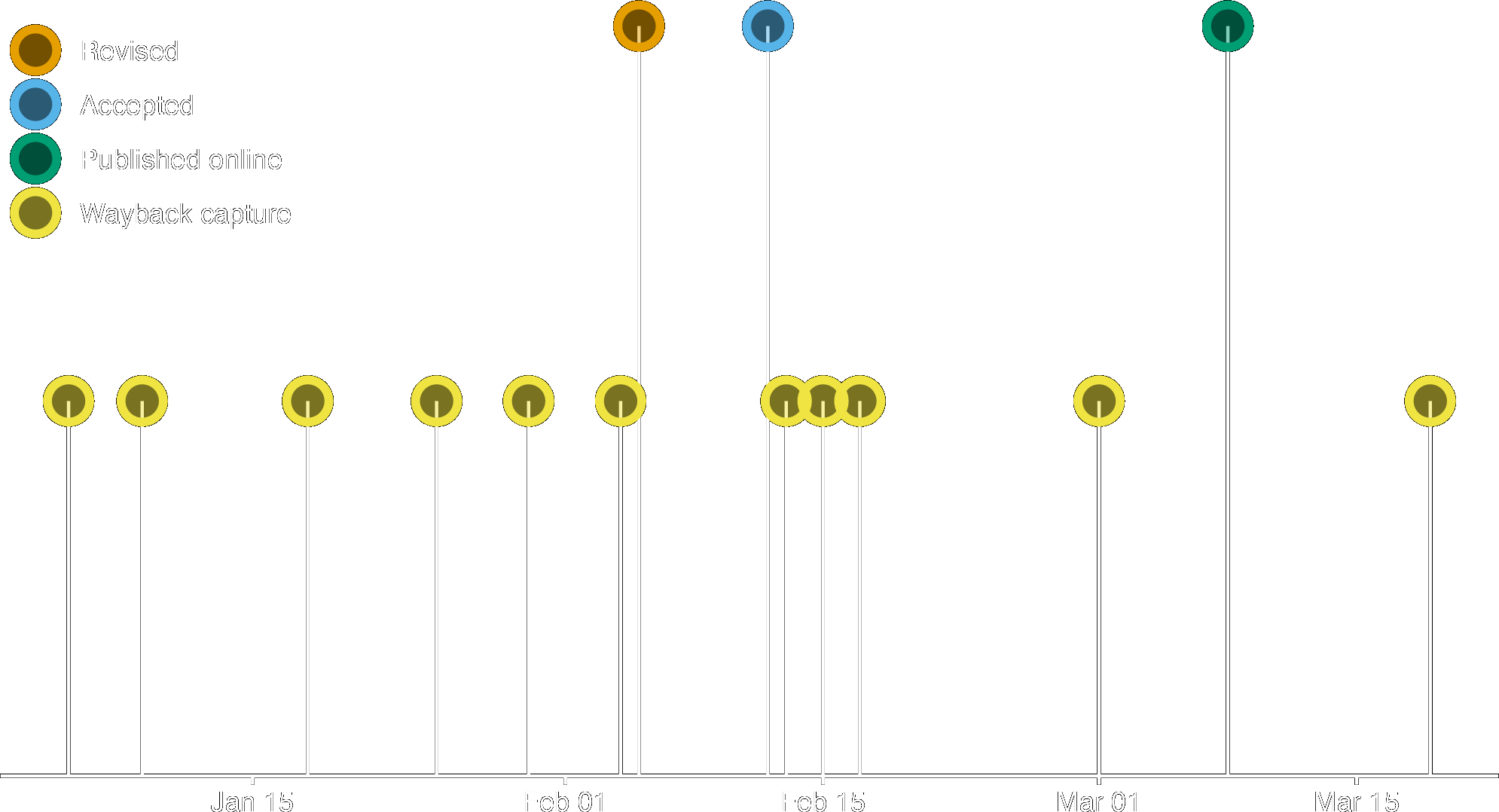

I read this last comment as claiming that, under Elsevier policy, artificial intelligence tools need not be declared if they are used solely to correct a manuscript’s grammar. This is not the case. Figure 2 shows a timeline of the article’s trajectory from revised submission to online publication.

The Elsevier policy on generative artificial intelligence was captured 11 times by the Wayback Machine between January 5 and March 19, 2024, as shown on the timeline. Each time, the text was identical, with the relevant section reading as follows:

Where authors use generative AI and AI-assisted technologies in the writing process, these technologies should only be used to improve readability and language of the work. Applying the technology should be done with human oversight and control and authors should carefully review and edit the result, because AI can generate authoritative-sounding output that can be incorrect, incomplete or biased. The authors are ultimately responsible and accountable for the contents of the work.

Authors should disclose in their manuscript the use of AI and AI-assisted technologies and a statement will appear in the published work. Declaring the use of these technologies supports transparency and trust between authors, readers, reviewers, editors and contributors and facilitates compliance with the terms of use of the relevant tool or technology.

At no point does the policy distinguish between using AI to “correct the language grammatically” and other applications. Indeed, the policy states that AI tools “should *only* be used to improve readability and language” [emphasis added]. Improving language is therefore the sole permitted use, which makes it exactly the use that the disclosure requirement covers.